MMT: Exploiting Fine-Grained Parallelism in Dynamic Memory Management, Tiware et al. is worth reading if you are interested in systems-level performance programming. If you work in a managed environment, the briefly described lockless protocol for request-reply queues is worth noting.

This paper describes the use of a "Memory Management Thread" (MMT) to automatically parallelize memory management in C/C++ programs. In allocation-intensive domains (often domains that involve traversing or transforming big graphs -- constraint programming, parsing and translation, optimization problems, etc.), memory management overhead can be around 30% of execution time.

The fundamental challenge is that memory management is very fine-grained and that communication and coordination between cores and processors can easily introduce more overhead than is gained by "easy wins" like asynchronous deallocation.

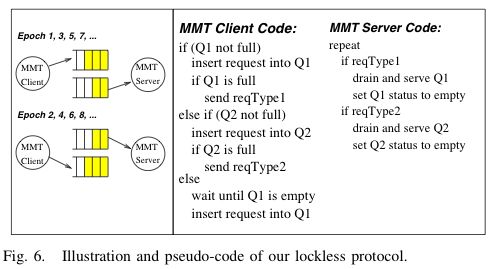

The improvement appears to come from speculative bulk allocation and bulk de-allocation. What makes the "speculation" hard is synchronization and making sure that you avoid too much contention. Their win in this area appears to come from an elegant approach: dual request and reply queues access to which is ping-ponged between the client and server.

Their benchmark numbers are good.