I'm a big fan of using a pair of identical networks to turn two inputs into output vectors, which are then fed into a network trained to judge whether its two inputs are the same or different.

This is a powerful technique for "low k-shot" comparisons. Most ML techniques that try to identify, say, "photos of _my_ cat," require lots of examples of the general category plus perhaps dozens or hundreds of photos of my specific cat. But with twin networks, you train the discriminator portion to tell whether one input is from the same source as the other. The discriminator's two inputs are generated by two sub-networks that share the same weights.

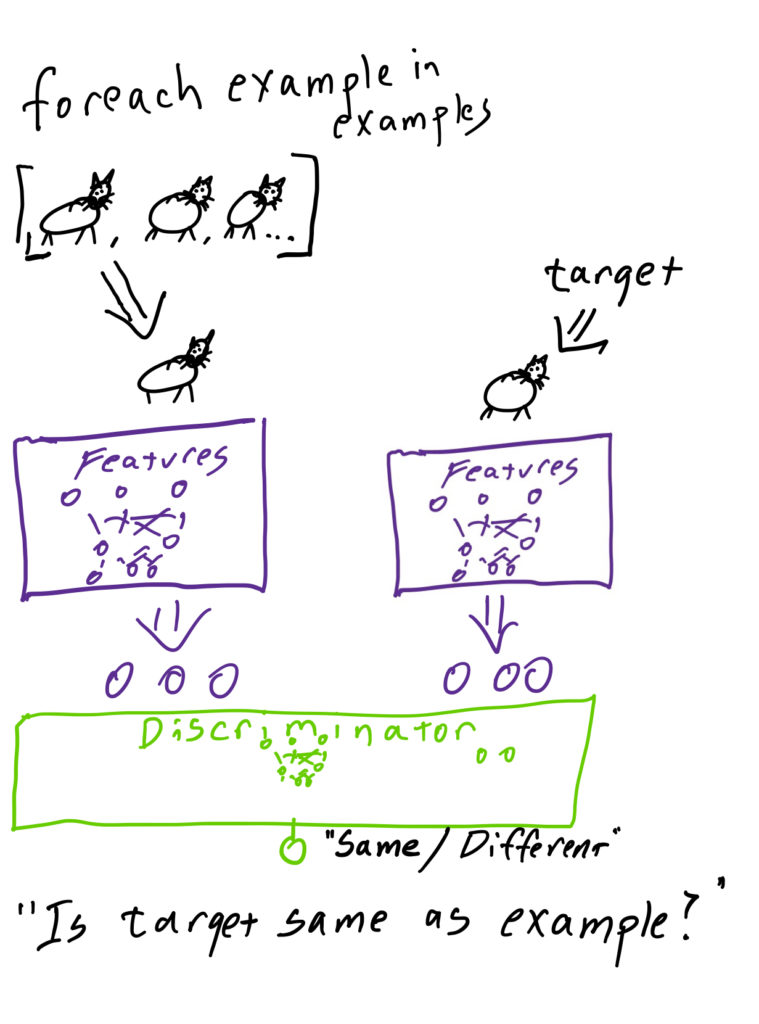

[caption id="attachment_6265" align="alignnone" width="584"] A twin network for low k-shot identification[/caption]

Since training backpropagates errors from the discriminator into the sub-networks that feed it, the discriminator can only succeed if its inputs carry "features that distinguish a particular cat." And since the weights that generate those inputs are shared, training (if it's successful) creates a "Features" sub-network that essentially extracts a "fingerprint useful for discriminating."
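To make the shape of this concrete, here is a minimal forward-pass sketch in plain Python. It is not the code from my project: the layer sizes, the `features`, `discriminator`, and `twin_forward` names, and the distance-based scoring head are all assumptions chosen for illustration, and the weights are random rather than trained. The point is only that both branches call the same function with the same weights.

```python
import math
import random

random.seed(0)  # fixed seed so the illustrative weights are reproducible

IN_DIM, FEAT_DIM = 4, 3  # hypothetical sizes, chosen only for the sketch

# One set of weights, used by BOTH branches of the twin network.
shared_weights = [[random.uniform(-1, 1) for _ in range(IN_DIM)]
                  for _ in range(FEAT_DIM)]


def features(x, weights=shared_weights):
    """Hypothetical "Features" sub-network: a single dense layer with tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in weights]


def discriminator(f_a, f_b, scale=1.0, bias=2.0):
    """Hypothetical discriminator head: a logistic score on the distance
    between the two fingerprints. Higher means "more likely the same"."""
    dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(f_a, f_b)))
    return 1.0 / (1.0 + math.exp(scale * dist - bias))  # sigmoid(bias - scale * dist)


def twin_forward(x_a, x_b):
    """Run both inputs through the same feature extractor, then compare."""
    return discriminator(features(x_a), features(x_b))
```

In a real system the discriminator's loss would be backpropagated through both calls to `features`, so every update lands on the single shared set of weights.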

To run inference with a twin network, then, you hold your target data constant in one branch and feed each item of your exemplar dataset through the other. For each exemplar, you get a "similarity to target" rating that you can use for final processing (perhaps with a "That looks like a new cat!" threshold or perhaps with a user-reviewed "Compare the target with these three similar cats" UX, etc.).
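The inference loop described above might look roughly like this. Again, this is a sketch under assumptions: `similarity` is a cosine-similarity stand-in for a trained discriminator, the fingerprints are made-up two-element vectors, and `identify`, `new_threshold`, and `top_k` are hypothetical names and values.

```python
import math


def similarity(f_a, f_b):
    """Stand-in for the trained discriminator: cosine similarity
    between two fingerprint vectors."""
    dot = sum(a * b for a, b in zip(f_a, f_b))
    norm = math.sqrt(sum(a * a for a in f_a)) * math.sqrt(sum(b * b for b in f_b))
    return dot / norm if norm else 0.0


def identify(target_fp, exemplars, new_threshold=0.8, top_k=3):
    """Score the target against every exemplar fingerprint.

    Returns (is_new, best), where best is a list of up to top_k
    (score, name) pairs sorted from most to least similar, and is_new
    is True when even the best match falls below new_threshold.
    """
    scored = sorted(
        ((similarity(target_fp, fp), name) for name, fp in exemplars.items()),
        reverse=True,
    )
    best = scored[:top_k]
    is_new = not best or best[0][0] < new_threshold
    return is_new, best


# Made-up fingerprints for three known cats.
exemplars = {
    "cat_a": [1.0, 0.0],
    "cat_b": [0.0, 1.0],
    "cat_c": [1.0, 1.0],
}

is_new, best = identify([1.0, 0.1], exemplars)
```

Here `is_new` drives the "That looks like a new cat!" branch, and `best` is the short list you would hand to a reviewer. Because the exemplar fingerprints do not depend on the target, they can be computed once and cached.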

As I said, I'm a big fan of this technique, and it's what I've been using in my whale shark identification project.

However, there's one unfortunate thing about this technique, which is that it was labeled as a "Siamese network" back in the day. This is a reference to the term "Siamese twins," which is an archaic and potentially offensive way to refer to conjoined twins.

It would be a shame if this powerful technique grew in popularity and carried with it an unfortunate label. "Twin networks" is just as descriptive and not problematic.