One of my big weekend projects (for longer than I care to admit) has been building a pipeline for identifying individual whalesharks from photos. The project had kind of grown moribund as I repeatedly failed to get any decent level of recognition despite using what I thought was a good technique: an algorithm lifted from astrophotography that matches patterns using the angles between stars. Individual whalesharks have a unique constellation of spots, and whaleshark researchers have used that algorithm (with a lot of manual work) to successfully ID whalesharks in their catalogs.

But when I tried to build an ML system that went from photos to meshes to histograms of angles, I just couldn't get any traction. I'd back-burnered the project until Christmas Day of this year, when I saw my first whaleshark in the wild. (For some reason, it's been an incredible year for whalesharks in Hawaii. Climate change? Fluke?)

With fresh eyes on the problem, I decided to create a synthetic dataset that would make experimentation easier. The result is what I call 'Leopard Spheres': randomly spotted spheres from which I can generate the histograms of angles that the algorithm takes as input.

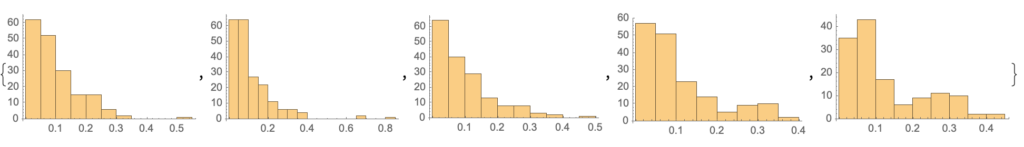
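The post doesn't spell out how the spheres are generated, so here is a minimal sketch of one way to do it, under assumptions that are mine rather than the original pipeline's: spots are placed uniformly on a unit sphere, an orthographic camera on the +z axis sees only the spots with z > 0, the visible spots are triangulated with `scipy.spatial.Delaunay`, and every interior triangle angle goes into an 18-bin (10 degree) histogram. The function names (`leopard_sphere`, `visible_projection`, `triangle_angles`, `angle_histogram`) are hypothetical.

```python
import numpy as np
from scipy.spatial import Delaunay


def leopard_sphere(n_spots, rng):
    """Hypothetical generator: n_spots unit vectors drawn uniformly on the sphere."""
    v = rng.normal(size=(n_spots, 3))
    return v / np.linalg.norm(v, axis=1, keepdims=True)


def visible_projection(spots):
    """Orthographic camera on the +z axis: keep spots facing it (z > 0), drop z."""
    front = spots[spots[:, 2] > 0]
    return front[:, :2]


def triangle_angles(points_2d):
    """Delaunay-triangulate the 2D spots and return every interior angle in degrees."""
    tri = Delaunay(points_2d)
    angles = []
    for simplex in tri.simplices:
        p = points_2d[simplex]              # the 3 corners of one triangle
        for i in range(3):
            a = p[(i + 1) % 3] - p[i]       # the two edges leaving corner i
            b = p[(i + 2) % 3] - p[i]
            cos = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
            angles.append(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))
    return np.array(angles)


def angle_histogram(points_2d, n_bins=18):
    """Normalized histogram of triangle angles over 0 to 180 degrees."""
    counts, _ = np.histogram(triangle_angles(points_2d), bins=n_bins, range=(0.0, 180.0))
    return counts / counts.sum()


rng = np.random.default_rng(0)
spots = leopard_sphere(60, rng)
hist = angle_histogram(visible_projection(spots))
print(np.round(hist, 3))
```

Because each triangle's three angles sum to 180 degrees, the histogram is a fingerprint of the triangle *shapes* in the mesh, which is what makes it insensitive to scale and to rotation within the image.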

With the leopard spheres in hand, I quickly became disillusioned with the astrophotography algorithm. While it's robust to rotation in the image (XY) plane and to small out-of-plane rotations, the angle histograms really jump around under the larger out-of-plane rotations that are typical when photographing an animal (even when binning the photographs into sectors).
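The asymmetry between the two kinds of rotation can be reproduced on synthetic spheres. The sketch below is an illustration under assumptions of my own (200 spots, an orthographic camera on +z, 40 degree rotations, L1 distance between normalized 18-bin angle histograms); the helper names are hypothetical. A rotation about the camera's z axis only rotates the projected points, and a Delaunay triangulation and its angles are unchanged by rotation, so that distance should be essentially zero. Tilting about the x axis changes which spots are visible and foreshortens the rest, so the triangles themselves change.

```python
import numpy as np
from scipy.spatial import Delaunay


def rotation_z(deg):
    """Rotation about the camera (z) axis, i.e. an in-plane (XY) rotation."""
    t = np.radians(deg)
    return np.array([[np.cos(t), -np.sin(t), 0.0],
                     [np.sin(t), np.cos(t), 0.0],
                     [0.0, 0.0, 1.0]])


def rotation_x(deg):
    """Rotation about the x axis: tilts the sphere toward or away from the camera."""
    t = np.radians(deg)
    return np.array([[1.0, 0.0, 0.0],
                     [0.0, np.cos(t), -np.sin(t)],
                     [0.0, np.sin(t), np.cos(t)]])


def angle_histogram(spots, n_bins=18):
    """Project visible (z > 0) spots orthographically, triangulate, bin the angles."""
    pts = spots[spots[:, 2] > 0][:, :2]
    tri = pts[Delaunay(pts).simplices]              # shape (n_triangles, 3, 2)
    a = np.roll(tri, -1, axis=1) - tri               # edge from each corner to the next
    b = np.roll(tri, -2, axis=1) - tri               # edge to the corner after that
    cos = (a * b).sum(-1) / (np.linalg.norm(a, axis=-1) * np.linalg.norm(b, axis=-1))
    angles = np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))
    counts, _ = np.histogram(angles, bins=n_bins, range=(0.0, 180.0))
    return counts / counts.sum()


def unit_spots(n, rng):
    v = rng.normal(size=(n, 3))
    return v / np.linalg.norm(v, axis=1, keepdims=True)


rng = np.random.default_rng(1)
spots = unit_spots(200, rng)
other = unit_spots(200, rng)                         # a different "animal"

base = angle_histogram(spots)
d_inplane = np.abs(angle_histogram(spots @ rotation_z(40).T) - base).sum()
d_tilt = np.abs(angle_histogram(spots @ rotation_x(40).T) - base).sum()
d_other = np.abs(angle_histogram(other) - base).sum()
print(f"in-plane: {d_inplane:.3f}  tilt: {d_tilt:.3f}  other sphere: {d_other:.3f}")
```

The comparison worth watching is `d_tilt` against `d_other`: when a tilted view of one sphere is about as far from the original as a completely different sphere is, the histogram alone can't tell the two apart.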

So I've more or less decided to abandon the "bag of angles" algorithm. Instead, I'll run a convolutional neural network over the Delaunay mesh that I generate from the spots and use it as the shared branch of a twin (Siamese) network for identification. The big question is whether even that much preprocessing is overthinking the problem. I'll let you know.
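The post leaves the architecture open. One common way to run a "convolution" over a mesh is a graph convolutional network, so the sketch below swaps in Kipf & Welling's normalized propagation rule; it is an assumption, not the design described here. It builds an adjacency matrix from the Delaunay edges, uses two hypothetical per-spot features (distance to the centroid and node degree, both unaffected by in-plane rotation), applies two untrained graph-convolution layers with random weights, and mean-pools to a unit-length embedding. The "twin" part is simply that both photos pass through `embed` with the *same* weights, and a standard contrastive loss compares the two embeddings. All function names and sizes are illustrative.

```python
import numpy as np
from scipy.spatial import Delaunay


def mesh_adjacency(points_2d):
    """Symmetric 0/1 adjacency matrix of the Delaunay edges between spots."""
    n = len(points_2d)
    adj = np.zeros((n, n))
    for simplex in Delaunay(points_2d).simplices:
        for i in range(3):
            u, v = simplex[i], simplex[(i + 1) % 3]
            adj[u, v] = adj[v, u] = 1.0
    return adj


def node_features(points_2d, adj):
    """Hypothetical per-spot features: scaled distance to centroid and scaled degree."""
    radius = np.linalg.norm(points_2d - points_2d.mean(axis=0), axis=1)
    degree = adj.sum(axis=1)
    return np.stack([radius / radius.mean(), degree / degree.mean()], axis=1)


def embed(points_2d, weights):
    """Graph-convolution layers (normalized A + I propagation, ReLU) + mean pooling."""
    adj = mesh_adjacency(points_2d)
    a_hat = adj + np.eye(len(points_2d))                     # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]  # D^-1/2 (A+I) D^-1/2
    h = node_features(points_2d, adj)
    for w in weights:
        h = np.maximum(a_norm @ h @ w, 0.0)                  # mix neighbours, then ReLU
    z = h.mean(axis=0)                                       # spot-order-independent readout
    return z / (np.linalg.norm(z) + 1e-12)


def contrastive_loss(z1, z2, same, margin=1.0):
    """Pull matching pairs together; push non-matching pairs at least `margin` apart."""
    d = np.linalg.norm(z1 - z2)
    return d ** 2 if same else max(margin - d, 0.0) ** 2


rng = np.random.default_rng(0)
weights = [rng.normal(scale=0.5, size=(2, 16)), rng.normal(scale=0.5, size=(16, 32))]
shark_a = rng.uniform(size=(40, 2))
shark_a_again = shark_a + rng.normal(scale=0.01, size=shark_a.shape)  # jittered re-sighting
shark_b = rng.uniform(size=(40, 2))

za, za2, zb = (embed(p, weights) for p in (shark_a, shark_a_again, shark_b))
print(contrastive_loss(za, za2, same=True), contrastive_loss(za, zb, same=False))
```

Training would adjust `weights` to drive the first loss down and the second up across many labeled pairs. The mean-pooling readout means the embedding doesn't depend on the order in which spots are detected.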